Date of writing: 4th November 2018

Last edit: 4th November 2018

JUST GIVE ME THE SCRIPT AND CONFIG

If all you need are the logstash configuration and script to generate the translate data, go here:

https://github.com/kevinwilcox/python-elk

It is in the directory called "crawl_ad_ldap"!

Everyone Else 8^)

I recently tweeted about something that popped up in my day job and promised a bit more detail in a blog post. It has sort of worked out "to the good" because I've mentioned wanting to cover enrichment with AD info before but this really pushed that into overdrive...so here we are!

The Problem

When I talk to folks about what they're logging, and why they want to use something like Elastic, Splunk, GrayLog, etc., there are a few log types that regularly pop up. It seems like everyone wants to pull login logs, be they 4624 events in Windows or SSH logins from the *nix world (I know, OpenSSH has been ported to Windows). Both of these use pretty well-defined formats -- even though OpenSSH logs to auth.log (generally) and uses syslog (generally), the format of an SSH success or failure is pretty consistent.

For the purpose of this post, we're going to focus on one field that's common to both Windows 4624 events and SSH authentications - the username field.

Let's make things a bit "real life". Think about the scenario where you want to search for every login to a system in Human Resources, or for every login by a user in Finance and Payroll. You would need to pull every login over <x> time period, do an OU lookup for every workstation name or username and then discard anything not in the OU you care about, or you'd need to pull a list of each user in those specific OUs and look for logins for those users. Those methods are pretty traditional but I was 100% sure there's a better way using modern tools (specifically, using my SIEM of choice - Elastic).

Method One - Elasticsearch

My initial solution was to use python to crawl Active Directory and LDAP for all computer and user objects (and the properties I need), cache that data locally in Elasticsearch and then query for the relevant data each time logstash parses or extracts a username field. By doing that I can somewhat normalise all of my login logs - and then it doesn't matter if I know what all of the OUs or groups are in my organisation, or who is a member of which one, as long as I have mostly-current information from AD and LDAP.

I figured I already did this with OUI info for DHCP logs and Elasticsearch was performing great so doing it for username fields shouldn't be a big issue, right? I'd just finished writing the python to pull the data and was working on the filter when I had a chat with Justin Henderson, author of the SANS SEC555 course, and he completely changed my approach.

Method Two - Translate

Justin recommended I try the translate filter. I already used the filter for login logs to add a field with the description for each login type so I wasn't completely new to using it but I had never used it with a dictionary file. After chatting with him and reading the documentation for a bit, I realised I could create a dictionary file that looked like this:

    username_0:
      distinguished_name: username_0, ou=foo, ou=foo2, ou=org, ou=local
      member_of:
        - cn=foo
        - cn=another_foo
        - cn=yet_another_foo
    username_1:
      distinguished_name: username_1, ou=foo3, ou=foo4, ou=org, ou=local
      member_of:
        - cn=foo3

Then any time logstash sees username_0 in the "username" field, it can add the distinguished_name and member_of fields to the metadata for that log.
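
To make the idea concrete, here is a minimal Python sketch of what that lookup amounts to. It is not how logstash implements the translate filter; the DICTIONARY contents, the enrich() function and the "from_directory" field name are all made up for illustration, reusing the placeholder usernames from the example above.

```python
# Hypothetical stand-in for the parsed dictionary file shown above.
DICTIONARY = {
    "username_0": {
        "distinguished_name": "username_0, ou=foo, ou=foo2, ou=org, ou=local",
        "member_of": ["cn=foo", "cn=another_foo", "cn=yet_another_foo"],
    },
    "username_1": {
        "distinguished_name": "username_1, ou=foo3, ou=foo4, ou=org, ou=local",
        "member_of": ["cn=foo3"],
    },
}


def enrich(event, dictionary, field="username", destination="from_directory"):
    """Return a copy of event with directory data added when the user is known."""
    enriched = dict(event)
    match = dictionary.get(event.get(field))
    if match is not None:
        enriched[destination] = match
    return enriched


if __name__ == "__main__":
    # A known user gains the extra field; an unknown user passes through untouched.
    print(enrich({"username": "username_0"}, DICTIONARY))
    print(enrich({"username": "someone_else"}, DICTIONARY))
```

The point of the sketch is that the lookup is a plain key match on the username, so the enrichment is only as current as the dictionary it reads.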

The operative word being "could"...I'd still have a bit of work to go from that idea to something I could roll out to my logstash nodes, especially since they run on BSD and Linux where there isn't exactly an option to run "get-aduser".

A First Pass

The AD controller I used for this post is configured to use osg.local as its domain. I've added an OU called "osg_users" and that OU has two users, "testuser" and "charlatan".

"charlatan" is also a member of a group called "InfoSec" and "testuser" is a member of a group called "Random Group".

First, I needed to set up a configuration file so I could put directory-specific information in there. The one I wrote for this blog is named conn_info.py and looks like this:

    ad_server = "IP_of_AD_controller"
    # the backslash is doubled so Python doesn't treat "\c" as an escape sequence
    ad_search_user = "osg\\charlatan"
    ad_search_pass = 'charlatans_password'
    ad_search_base = "ou=osg_users,dc=osg,dc=local"
    ad_search_props = ['displayname', 'distinguishedname', 'memberof']

Ideally the charlatan user/password would be a service account but it works for this example.
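
If you'd rather not keep a password in a file at all, one option is to read the connection details from the environment. This is only a sketch of that idea, not what the script in this post does: the load_conn_info() function, the environment variable names and the "svc_ldap_reader" account are all hypothetical.

```python
import os


def load_conn_info(env=None):
    """Build the conn_info values from environment variables.

    The variable names (AD_SERVER, AD_SEARCH_USER, ...) are hypothetical;
    pick whatever fits your deployment.
    """
    env = os.environ if env is None else env
    return {
        "ad_server": env["AD_SERVER"],
        "ad_search_user": env["AD_SEARCH_USER"],  # ideally a read-only service account
        "ad_search_pass": env["AD_SEARCH_PASS"],
        "ad_search_base": env.get("AD_SEARCH_BASE", "ou=osg_users,dc=osg,dc=local"),
        "ad_search_props": ["displayname", "distinguishedname", "memberof"],
    }


if __name__ == "__main__":
    # Demo with an in-memory mapping instead of the real environment.
    demo_env = {
        "AD_SERVER": "IP_of_AD_controller",
        "AD_SEARCH_USER": "osg\\svc_ldap_reader",  # hypothetical service account
        "AD_SEARCH_PASS": "not_a_real_password",
    }
    settings = load_conn_info(demo_env)
    print(settings["ad_search_user"], settings["ad_search_base"])
```

A missing required variable raises KeyError straight away, which is usually preferable to binding with an empty password.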

You may assign any manner of properties to the users in your AD or LDAP but displayName, distinguishedName and memberOf are practically universal.

The next step was a script that could connect to and interrogate my AD server.

A really basic script using the ldap3 module and my conn_info.py file might look like this:

    #!/usr/bin/env python
    from ldap3 import Server, Connection, ALL, NTLM
    import conn_info

    # connect to the AD server and bind as the search user
    server = Server(conn_info.ad_server, get_info=ALL)
    conn = Connection(server, conn_info.ad_search_user, conn_info.ad_search_pass, auto_bind=True, auto_range=True)
    print("starting search...")
    try:
        searchString = "(objectclass=user)"
        # first page of results, at most 500 entries
        conn.search(conn_info.ad_search_base, searchString, attributes=conn_info.ad_search_props, paged_size = 500)
        for entry in conn.entries:
            print(str(entry))
        # the paged-results control hands back a cookie while more pages remain
        cookie = conn.result['controls']['1.2.840.113556.1.4.319']['value']['cookie']
        while(cookie):
            print('receiving next batch')
            conn.search(conn_info.ad_search_base, searchString, attributes=conn_info.ad_search_props, paged_size = 500, paged_cookie = cookie)
            for entry in conn.entries:
                print(str(entry))
            cookie = conn.result['controls']['1.2.840.113556.1.4.319']['value']['cookie']
        print('searches finished')
    except Exception as e:
        print("error")
        print(e)
    exit("exiting...")

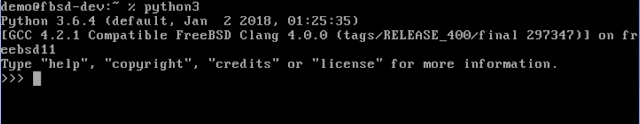

Let's step through that a bit. ldap3 is a fantastic module for interrogating AD and LDAP and it works with both python 2 and python 3. Importing conn_info makes sure we can read variables (I know, constants, and they should be in all caps) from the conn_info.py file. The next few lines connect to the AD server; the auto_bind option saves a step by binding to the server after connecting as the specified user.
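
The paging part of the script (one search, then a loop that keeps searching for as long as the server hands back a cookie) can be tried without a directory server. The sketch below is a simulation only: fake_paged_search() is a made-up stand-in for the real search call, and it uses a list offset as the cookie where a real server returns an opaque value.

```python
def fake_paged_search(records, paged_size, cookie=None):
    """Stand-in for a paged directory search: returns (page, next_cookie).

    The cookie here is just the offset of the next page; a real server
    returns an opaque value, and an empty cookie means no more results.
    """
    start = cookie or 0
    page = records[start:start + paged_size]
    next_offset = start + paged_size
    next_cookie = next_offset if next_offset < len(records) else None
    return page, next_cookie


def fetch_all(records, paged_size):
    """Collect every page using the same search-then-loop shape as the script."""
    results = []
    page, cookie = fake_paged_search(records, paged_size)
    results.extend(page)
    while cookie:
        page, cookie = fake_paged_search(records, paged_size, cookie)
        results.extend(page)
    return results


if __name__ == "__main__":
    users = ["user_%d" % n for n in range(1200)]
    # 1200 records arrive as pages of 500, 500 and 200
    print(len(fetch_all(users, 500)))
```

ldap3 also documents a paged_search helper under conn.extend.standard that wraps this loop, which may be worth a look if you don't need to handle the cookie yourself.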

Unless the directory configuration is modified, most objects in AD will probably be of either type "user" or type "computer".

"paged_size" is an interesting parameter in that it limits the results to 500 at a time; the cookie returned with each batch is then used to paginate through to the last result set (hence the "while" loop).

This script doesn't produce output that's useful to logstash but it is really useful to us as people. For my example DC, I get this:

    (ldap_post) test@u18:~/ad_ldap_post$ python test.py
    starting search...
    DN: CN=Just A. Charlatan,OU=osg_users,DC=osg,DC=local - STATUS: Read - READ TIME: 2018-10-15T00:32:44.624881
        displayName: Just A. Charlatan
        distinguishedName: CN=Just A. Charlatan,OU=osg_users,DC=osg,DC=local
        memberOf: CN=InfoSec,DC=osg,DC=local

    DN: CN=Testing User,OU=osg_users,DC=osg,DC=local - STATUS: Read - READ TIME: 2018-10-15T00:32:44.625089
        displayName: Testing User
        distinguishedName: CN=Testing User,OU=osg_users,DC=osg,DC=local
        memberOf: CN=Random Group,DC=osg,DC=local

    exiting...

Notice how similar this output is to doing:

get-aduser -searchbase "ou=osg_users,dc=osg,dc=local" -filter * -properties displayname, distinguishedname, memberof

Making it More Useful

The real goal is to have something I can read into logstash. The translate filter can read CSV, JSON and YAML dictionary files and, for this purpose, I find YAML the cleanest to read. Instead of using a library to convert between object types and output YAML, I'm just going to output the YAML directly.

A sample function to make it easy to build the output might look like:

    def format_output(user_entry):
        # one top-level key per user, with the properties nested beneath it
        entry_string = str(user_entry['samaccountname']) + ":\n"
        entry_string += "  dn: " + str(user_entry['distinguishedname']) + "\n"
        entry_string += "  displayname: " + str(user_entry['displayname']) + "\n"
        entry_string += "  memberof:\n"
        # always write group memberships as a YAML list, even for a single group
        for i in user_entry['memberof']:
            entry_string += "    - " + str(i) + "\n"
        return entry_string

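With a couple of minor edits (calling format_output() on each entry and adding 'samaccountname' to ad_search_props so the function has that attribute to read), my output now looks like this: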

    charlatan:
      dn: CN=Just A. Charlatan,OU=osg_users,DC=osg,DC=local
      displayname: Just A. Charlatan
      memberof:
        - CN=InfoSec,DC=osg,DC=local
    testuser:
      dn: CN=Testing User,OU=osg_users,DC=osg,DC=local
      displayname: Testing User
      memberof:
        - CN=Random Group,DC=osg,DC=local

It's important to output memberof as a list by default, even for a user in a single group, because as soon as you add users to two or more groups, that's how YAML parsers will expect the values to be formatted.
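
One thing worth considering when writing YAML by hand is quoting. A display name containing ": " or a quote character, or an account name such as "no" or "null" that some YAML parsers read as a boolean or null, can produce a file that doesn't mean what you intended. The sketch below is a variant of format_output() that double-quotes the key and every value. It leans on the fact that a JSON string is also a valid YAML double-quoted scalar; the function names and the sample display name are made up for illustration, and a plain dict stands in for an ldap3 entry.

```python
import json


def yaml_quote(value):
    """Return value as a double-quoted scalar (JSON string syntax, which YAML also accepts)."""
    return json.dumps(str(value), ensure_ascii=False)


def format_output_quoted(user_entry):
    """Variant of format_output that quotes the key and every value."""
    lines = [yaml_quote(user_entry["samaccountname"]) + ":"]
    lines.append("  dn: " + yaml_quote(user_entry["distinguishedname"]))
    lines.append("  displayname: " + yaml_quote(user_entry["displayname"]))
    lines.append("  memberof:")
    for group in user_entry["memberof"]:
        lines.append("    - " + yaml_quote(group))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # A plain dict stands in for an ldap3 entry; the display name is invented
    # to show a value that would be awkward without quoting.
    sample = {
        "samaccountname": "charlatan",
        "distinguishedname": "CN=Just A. Charlatan,OU=osg_users,DC=osg,DC=local",
        "displayname": 'Just "A." Charlatan: tester',
        "memberof": ["CN=InfoSec,DC=osg,DC=local"],
    }
    print(format_output_quoted(sample))
```

Because ensure_ascii is off, non-ASCII names are written as-is, so the file should be written as UTF-8.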

The last step in the script is to write to file instead of outputting to the display. Stripping out the print statements and using file output instead, my script now looks like this:

    #!/usr/bin/env python
    from ldap3 import Server, Connection, ALL, NTLM
    import conn_info

    def format_output(user_entry):
        entry_string = str(user_entry['samaccountname']) + ":\n"
        entry_string += "  dn: " + str(user_entry['distinguishedname']) + "\n"
        entry_string += "  displayname: " + str(user_entry['displayname']) + "\n"
        entry_string += "  memberof:\n"
        for i in user_entry['memberof']:
            entry_string += "    - " + str(i) + "\n"
        return entry_string

    server = Server(conn_info.ad_server, get_info=ALL)
    conn = Connection(server, conn_info.ad_search_user, conn_info.ad_search_pass, auto_bind=True, auto_range=True)
    try:
        out_string = ""
        searchString = "(objectclass=user)"
        # 'samaccountname' must be listed in conn_info.ad_search_props for format_output()
        conn.search(conn_info.ad_search_base, searchString, attributes=conn_info.ad_search_props, paged_size = 500)
        for entry in conn.entries:
            out_string = out_string + format_output(entry)
        cookie = conn.result['controls']['1.2.840.113556.1.4.319']['value']['cookie']
        while(cookie):
            conn.search(conn_info.ad_search_base, searchString, attributes=conn_info.ad_search_props, paged_size = 500, paged_cookie = cookie)
            for entry in conn.entries:
                out_string = out_string + format_output(entry)
            cookie = conn.result['controls']['1.2.840.113556.1.4.319']['value']['cookie']
        # write the whole dictionary in one go; the file is closed automatically
        with open('ad_users_file.yml', 'w') as out_fh:
            out_fh.write(out_string)
    except Exception as e:
        # str() is needed here; concatenating the exception object itself raises a TypeError
        print("error: " + str(e))
    exit()

When I run it, I have a new file created called "ad_users_file.yml". It looks like this:

    charlatan:
      dn: CN=Just A. Charlatan,OU=osg_users,DC=osg,DC=local
      displayname: Just A. Charlatan
      memberof:
        - CN=InfoSec,DC=osg,DC=local
    testuser:
      dn: CN=Testing User,OU=osg_users,DC=osg,DC=local
      displayname: Testing User
      memberof:
        - CN=Random Group,DC=osg,DC=local

This is ideal for logstash to use as a dictionary - now I can tell it that if it ever sees "charlatan" in a field called "user", it should add the information from my dictionary to that log event.
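
If the script is going to run on a schedule, it may be worth writing the file atomically, since the translate filter can reload its dictionary periodically and could in principle pick up a half-written file. The following is a minimal sketch of that idea, assuming Python 3 and a POSIX filesystem; write_atomically() and its mode parameter are my own hypothetical helper, not part of the script above.

```python
import os
import tempfile


def write_atomically(path, text, mode=0o644):
    """Write text to a temp file next to path, then rename it over path."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as tmp_fh:
            tmp_fh.write(text)
        # mkstemp creates the file readable by its owner only; loosen that
        # so a separate logstash user can read the dictionary.
        os.chmod(tmp_path, mode)
        # The rename is atomic on POSIX when both paths are on one filesystem.
        os.replace(tmp_path, path)
    except Exception:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


if __name__ == "__main__":
    # Demo in a throwaway directory rather than next to a real dictionary.
    demo_dir = tempfile.mkdtemp()
    demo_path = os.path.join(demo_dir, "ad_users_file.yml")
    write_atomically(demo_path, "charlatan:\n  dn: CN=Just A. Charlatan,OU=osg_users,DC=osg,DC=local\n")
    with open(demo_path, encoding="utf-8") as fh:
        print(fh.read())
```

Readers of the file then see either the old dictionary or the new one, never a mix of the two.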

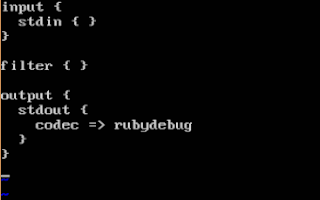

A Sample Logstash Config

Instead of testing with a Windows log or an OpenSSH log, I can have a really simple logstash "testing" config file that takes in user input in JSON format, looks up the information in a dictionary when it sees a field called "user" then sends the resulting object to the display.

That "simple" configuration for logstash, which I'm naming ls_test.conf, could look like this:

    input { stdin { codec => json } }
    filter {
      if [user] {
        translate {
          field => "user"
          destination => "from_ad"
          dictionary_path => "ad_users_file.yml"
          refresh_behaviour => "replace"
        }
      }
    }
    output { stdout { codec => rubydebug } }

Logstash can be started with a specific config file with:

sudo /usr/share/logstash/bin/logstash -f ls_test.conf

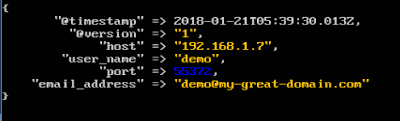

Once it's ready for input, if I use the following two objects then I get a good test of what happens if logstash both does and does not find my object in the dictionary:

{"user":"charlie"}

{"user":"charlatan"}

When I use them, I get the following output:

    {"user":"charlie"}
    {
        "@version" => "1",
        "user" => "charlie",
        "@timestamp" => 2018-10-15T02:10:06.240Z,
        "host" => "u18"
    }
    {"user":"charlatan"}
    {
        "@version" => "1",
        "user" => "charlatan",
        "@timestamp" => 2018-10-15T02:10:14.579Z,
        "host" => "u18",
        "from_ad" => {
            "dn" => "CN=Just A. Charlatan,OU=osg_users,DC=osg,DC=local",
            "displayname" => "Just A. Charlatan",
            "memberof" => [
                [0] "CN=InfoSec,DC=osg,DC=local"
            ]
        }
    }

This is exactly what I'd expect to see. Having everything under the "from_ad" field may not be ideal, but I can always use a mutate filter to move or rename any field to a more "reasonable" or "usable" place.

Wrapping Up

This is an enrichment technique that I really like and that I recommend implementing anywhere possible. It's also really flexible! I know I've written about it in the context of Active Directory but it's really in the context of any LDAP - Active Directory just happens to be the one folks tend to be familiar with these days. Want to search something by username? Great, here you go! Need to search the rest of your logs and limit it to only members of a given OU? No more waiting for your directory to return all of those objects and search for those, no more retrieving all of your logs and waiting while your SIEM looks for them one-at-a-time in a lookup table *at search time* with potentially out-of-date information - it's all added when the log is cut with the most current information at the time!