Then this week I was at work and was bitten, a bit harder than I found comfortable, by an inode re-use issue with Linux and filebeat and that got me to thinking (I know, how problematic is *that*?!)...

For the impatient and curious - the ultimate solution was to create the new file and have filebeat pick it up, then delete it after <x> minutes so that it drops out of the filebeat registry before the next file is created. This lets me avoid fiddling with filebeat options and I can control it all from my script (or with cron).
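As a rough sketch of the "delete it after <x> minutes" half of that workaround, something like the following could be called from a script or a cron job. The function name and the age check are assumptions made for illustration. How long filebeat needs before it forgets a removed file depends on its own configuration, which this sketch does not touch.

```python
import os
import time

def remove_if_older_than(path, max_age_minutes):
    """Delete path if it was last modified more than max_age_minutes ago.

    Returns True if the file was removed, False otherwise.
    (Hypothetical helper, not part of filebeat or of the original script.)
    """
    try:
        age_seconds = time.time() - os.path.getmtime(path)
    except FileNotFoundError:
        return False  # already gone, nothing to do
    if age_seconds > max_age_minutes * 60:
        os.remove(path)
        return True
    return False
```

Calling it on a file that is still fresh leaves the file alone, so it is safe to run on a schedule.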

The Issue - inode Re-Use

Before I go into a possible solution, allow me to outline the problem. Filebeat is amazing for watching text files and getting them into the Elastic stack - it has all the TLS and congestion-control bells and whistles, plus it lets you do some interesting things on the client if you're reading JSON files. To make sure it gets your data (and doesn't get your data twice), it has a registry file that makes note of which byte it just read in your file. To know that it's your file, it also keeps track of the name, the inode and the device on which that file resides.

The important part here is the concept of an inode.

Without getting too "into the weeds", an "inode" is the filesystem's own record for a file - it holds the file's metadata and points to where the data lives on the drive. An analogy would be your home. If you want to invite someone over, you give them your address. The equivalent for a file or directory may be its path -- "/var/log/syslog" or "C:\Windows\system32", for example.

However, you could ALSO give them your GPS coordinates. Even if your address changes, your GPS coordinates would remain the same. The equivalent for a file in POSIX-compliant operating systems is an inode number. Just like giving someone your GPS coordinates is kind of a pain, trying to remember inode numbers is a bit of a pain. This is why we don't really use them in day-to-day work but we use filenames and paths all the time. One is really easy for people to remember, one is really problematic.

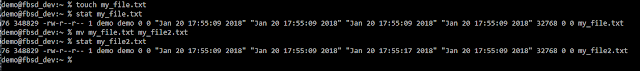

If the town/city/village/whatever renames your street then your address will change, even though your home didn't move - therefore your GPS coordinates won't change. Likewise, if I rename my file from "my_file.txt" to "my_file2.txt", I'm just changing the path and the inode number remains the same. Here is the output of "stat" on FreeBSD. The second column is the inode number:
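The same check can be made from python with os.stat(), whose st_ino field is the inode number. This is a minimal sketch that works in a temporary directory. The function name is made up for the example, and the file names simply mirror the ones in the paragraph above.

```python
import os
import tempfile

def inode_before_and_after_rename():
    """Create a file, rename it, and return its inode number before and after."""
    with tempfile.TemporaryDirectory() as work_dir:
        old_path = os.path.join(work_dir, "my_file.txt")
        new_path = os.path.join(work_dir, "my_file2.txt")
        with open(old_path, "w") as handle:
            handle.write("hi\n")
        inode_before = os.stat(old_path).st_ino
        os.rename(old_path, new_path)  # only the name changes
        inode_after = os.stat(new_path).st_ino
        return inode_before, inode_after

before, after = inode_before_and_after_rename()
print(before, after)
```

Both numbers should match, because a rename within the same filesystem only changes the directory entry that points at the inode.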

Here's where it gets really interesting. If I have a file called "my_file.txt", delete it and create a new file called "my_file.txt", the inode number may be the same - even though it's a completely new file with new information. Again, here is the output of doing that and of the 'stat' command:

Notice the inode number is the same for both files. If I have a program, like filebeat, that knows to watch "my_file.txt" with inode '348828', it may read the content of the original file ("hi") and store that it read <n> bytes. Then, even though I deleted the file and have *completely new data* in my new file, because the inode number is the same, it will start reading again at byte <n + 1>. That means I will have a log entry of "hi" - and then another that says " someone else". The first <n> bytes of the new file never get shipped, so now my SIEM has missing log data!
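To make the failure concrete, here is a deliberately simplified model of an offset registry keyed on inode number. It is not filebeat's actual logic (which also tracks the name and device), and the content of the second file is an assumption chosen so the result matches the " someone else" fragment described above.

```python
def read_new_bytes(registry, inode, content):
    """Return the bytes past the offset stored for this inode, then update it."""
    offset = registry.get(inode, 0)
    new_data = content[offset:]
    registry[inode] = len(content)
    return new_data

registry = {}    # inode number -> number of bytes already read
inode = 348828   # the inode number quoted in the text

# First file: nothing has been read yet, so everything is shipped.
first = read_new_bytes(registry, inode, b"hi")

# The file is deleted and a brand new file happens to get the same inode.
# The reader trusts the stored offset and skips the start of the new file.
second = read_new_bytes(registry, inode, b"hi someone else")

print(first)
print(second)
```

The reader never sees the beginning of the second file, because as far as the registry is concerned those bytes were already handled.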

One Solution - Write Directly to Logstash

This brings me to the fun part of this post - what to do when you really don't need an intermediary and just want to log something directly to logstash. I think Solaris may have been the Last Great Unix in some ways but there isn't a filebeat for Solaris and nxlog (community edition) is a bit of a pain to compile and install. That's okay because you can have something pick up a logfile and send it *as JSON* to your SIEM. Can't get a log shipper approved for install on <x> system but you need output from your scripts? That's okay because you can send that output directly to your SIEM!

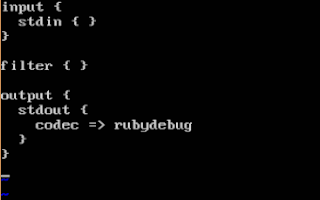

Step One - Logstash Needs to Listen For a TCP Connection

The first step is to set up a TCP listener in logstash. I have logstash 6.1.2 installed from Elastic's "apt" repo and a config file, "test.conf", with just enough in it to be able to test input/output. The "stdin" input type tells logstash to accept from "standard input", in this case my keyboard, and print to "standard output", in this case my monitor. The "rubydebug" codec tells logstash to make the output pretty so it's easier for a human to read:

And when I run logstash manually with that configuration using:

sudo -u logstash /usr/share/logstash/bin/logstash --path.config test.conf

I can confirm it works by hitting "enter" a couple of times and then typing in some text (I just wanted to show that it grabs lines where the only input is the "enter" key):

Logstash has a multitude of possible input types. For example, I have some logstash servers that accept syslog from some devices, unencrypted beats from others, encrypted beats from still others, raw data over UDP from even more and, finally, raw data over TCP from yet *another* set of devices. There are a LOT of possibilities!

NB: logstash will run as the logstash user, not root, so by default it can NOT bind to ports lower than 1024. Generally speaking you want to use high-numbered ports so things "Just Work"!

For this post, I want to tell logstash to listen for TCP connections on port 10001 and I want it to parse that input as JSON. To do this, I add a "tcp" input section. I've also added a comment line above each section telling whether it was in the above configuration so you can more easily see the added section:

Since I'm already logged into that VM, I'm going to go ahead and restart logstash with my custom configuration using the same sudo command as above:

sudo -u logstash /usr/share/logstash/bin/logstash --path.config test.conf

Since I still have the section for stdin as an input, logstash should start and give me a message saying it's waiting for input from "stdin":

Now I'm going to move over to my FreeBSD VM that has python installed to write my test script.

Step Two - The Scripting VM

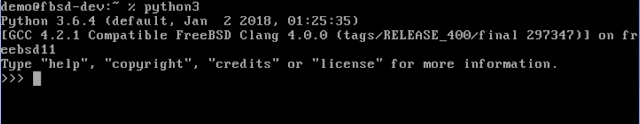

My development VM is running FreeBSD 11 and the only things I have done to it are add a user named 'demo' and install pkg, vim-lite and python3:

adduser demo

pkg install pkg

pkg install vim-lite

pkg install python3

After logging in, I made sure I could run "python3" and get an interpreter (the ">>>" means it is ready for me to start entering python code):

To exit the interpreter, I can either type "quit()" and hit the enter key or I can hold down the Control key and press 'd'.

With that done, it's time to write my script!

Step Three - Python, JSON and Sockets

I am a very beginner-level python programmer - I know just enough about it to read a little of it, so don't be intimidated if you're new to it. We're going to keep things as basic as possible.

First I need to tell python that I'm either going to read, parse or output JSON. It has a native module for JSON called, appropriately enough, 'json', and all I need to do is "import" it for my script to use it as a library. It also has a module called 'socket' that is used for network programming so I want to include that as well.

import json

import socket

Next I want to build an item to use for testing. I'm going to name it "sample_item" and it's going to have a single field called "user_name". Since my user on the VM is named 'demo', I'm going to use 'demo' as the value for the "user_name" field:

sample_item = { 'user_name': 'demo' }

That looks a lot like a JSON object but it is really a python dictionary, and printing it as-is gives single-quoted text that isn't standards-compliant JSON. To make sure it is exactly what I want, I am going to use the json.dumps() function to convert it to a JSON string. Logstash expects each JSON object to be delimited with a newline character ("\n") so I'm going to add one of those to the end and I'm going to save all of that into a new variable called "sample_to_send":

sample_to_send = json.dumps(sample_item) + "\n"
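A quick way to see why json.dumps() matters is to compare python's own text form of the dictionary with the JSON text. This is only an illustration, and the variable names "as_python_text" and "as_json_text" are made up for it.

```python
import json

sample_item = {'user_name': 'demo'}

as_python_text = str(sample_item)       # python's own representation
as_json_text = json.dumps(sample_item)  # standards-compliant JSON text

print(as_python_text)
print(as_json_text)

# The JSON text can be parsed back into an equal dictionary.
print(json.loads(as_json_text) == sample_item)
```

The first line printed uses single quotes, which a JSON parser rejects, while the second uses the double quotes that JSON requires.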

Now I need to create a network connection to my logstash server. I know that it has an IP address of 192.168.1.8 because I checked it earlier, and I know that logstash is listening for TCP connections on port 10001 because I configured it that way. Opening that connection in python is a multi-step process. First, I create a socket object that I call "my_socket":

my_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

Then I'm going to tell it to connect to the logstash server:

my_socket.connect(("192.168.1.8", 10001))

If that doesn't give an error then I'm going to try to write the test object, sample_to_send, to the socket. With python 3.x, sendall() takes a *bytes-like* object, not a string, so I need to use the encode() function -- by default it will encode to 'utf-8' which is just fine:

my_socket.sendall(sample_to_send.encode())
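For anyone curious what encode() actually does, this small sketch shows the string turning into bytes. The 'démo' value is a hypothetical one, picked only because it contains a non-ASCII character.

```python
text = '{"user_name": "démo"}\n'  # hypothetical value with one non-ASCII character
payload = text.encode()           # same as text.encode("utf-8")

print(type(text).__name__, type(payload).__name__)
print(len(text), len(payload))
```

The byte count is larger than the character count because 'é' takes two bytes in UTF-8, which is one reason sockets deal in bytes rather than strings.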

Finally I'm going to close my socket and exit the script:

my_socket.close()

exit()

Since I can run all of that in the interpreter, it looks like this:

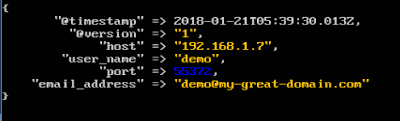

Notice I didn't get an error after the "sendall()" function. Moving back over to my logstash VM I see that I have received something:

192.168.1.7 is indeed the IP address of my FreeBSD VM and my JSON object has been parsed by logstash!

Step Four - Save A Script and Add Another Field

Running things inside the interpreter is pretty handy for testing small things but I want to run this repeatedly so I'm going to save it all in a script. At that point I can do interesting things like add error checking, send multiple items in a loop, etc.
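As one example of the "multiple items in a loop" idea, the events can be serialized one per line and sent in a single call. This is a sketch rather than what the saved script does. The "encode_events" helper and the second user are made up for illustration.

```python
import json

def encode_events(events):
    """Serialize each event as one line of JSON and return the bytes to send."""
    lines = [json.dumps(event) + "\n" for event in events]
    return "".join(lines).encode()

events = [
    {'user_name': 'demo'},
    {'user_name': 'demo2'},  # hypothetical second user
]
payload = encode_events(events)
print(payload)
```

The resulting bytes could then be handed to my_socket.sendall(payload) in place of the single encoded item.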

I'm going to name the script "test.py" and it will be pretty much exactly what I ran in the interpreter but with some blank lines thrown in to break it up for readability:

What if I want to add an email address for my user? How would that look? Well, it's just another field in the "sample_item" object (it's called a dictionary but dictionaries are objects...):

sample_item = { 'user_name': 'demo', 'email_address': 'demo@my-great-domain.com' }

In my script it looks like this (notice I've put the new field on a new line - this is purely for readability):

If I modify my script so that "sample_item" looks like the above and run it with "python3 test.py", I should see a new JSON object come in with a field called "email_address":

Sure enough, there it is!

Wrapping Up

This script is in pretty poor shape - for starters, it has zero error handling and it isn't documented. In a production environment I would want to do both of those things, as well as write out any errors to an optional error log on the disk. For now, though, I think it is a good starting point for getting custom data from a script to logstash without needing to rely on additional software like filebeat or nxlog. Never again will I have to look at custom log data sitting on a Solaris server and think, "you know, I'd like to have that in my SIEM but I don't know an easy way to get it there..."
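A first pass at the error handling mentioned above might look something like this. It is a sketch under assumptions, not the version in the repository: the "send_event" name, the five second timeout and the format of the error log line are all choices made for this example.

```python
import json
import socket

def send_event(event, host, port, timeout=5.0, error_log=None):
    """Send one event as a line of JSON. Return True on success, False on failure."""
    payload = (json.dumps(event) + "\n").encode()
    try:
        # create_connection() connects and applies the timeout; the "with"
        # block closes the socket even if sendall() raises.
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.sendall(payload)
        return True
    except OSError as err:
        # Connection refused, unreachable host and timeouts all land here.
        if error_log is not None:
            with open(error_log, "a") as log_file:
                log_file.write("could not send to %s:%s: %s\n" % (host, port, err))
        return False
```

With the setup from this post, a call such as send_event({'user_name': 'demo'}, "192.168.1.8", 10001, error_log="send_errors.log") would return False and append a line to the log file if logstash were not listening. An event that json.dumps() cannot serialize would still raise, since only network errors are caught here.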

There are some additional benefits, too. I don't *HAVE* to use python to send my events over. I could just as easily have sent data via netcat (nc) or via any other language that supports writing to a network socket (or, for that matter, just writing to a file!). Bash even supports doing things like:

echo '{"user_name":"demo"}' > /dev/tcp/192.168.1.8/10001

It does not even need to be bash on Linux - I've tested it on both Linux and FreeBSD, and it even works on Windows 10 using the Windows Subsystem for Linux.

A slightly better-documented version of the script, and the sample logstash configuration, can be found at:

https://github.com/kevinwilcox/python-elk