But don't take MY word for it.

The VM

To make things simple, I want to start my ELK series on an Ubuntu virtual machine. Ubuntu is well-supported by Elastic, and you can handle installation and updates with apt, the native package manager.

This is a pretty basic VM; I've just cloned my UbuntuTemplate VM and given it 4 CPUs, 4GB of RAM and 10GB of disk. While it is NOT what I'd want to use in production, it is sufficient for tonight's example.

If you're saying, "but wait, I thought the UbuntuTemplate VM only had one CPU!" then yes, you're correct! After I cloned it into a new VM, UbuntuELK, I used VBoxManage to change that to four CPUs.

Next I needed to increase the RAM from 512MB to 4GB. Again, an easy change with VBoxManage!

Then I changed the network type from NAT (the default) to 'bridged', meaning it would have an IP on the same segment of the network as the physical system. Since the VM is running on another system, that lets me SSH to it instead of having to use RDP to interact with it. In the snippet below, "enp9s0" is the network interface on the physical host. If my system had used eth0/eth1/eth<x>, I would have used that instead.
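Since the three VBoxManage changes are easy to mistype, here is a minimal sketch of them in one place. It assumes the VM is named UbuntuELK and the host interface is enp9s0, as described above, and that the VM is powered off (modifyvm only works on a stopped VM). The vbox wrapper and the DRY_RUN variable are my own additions so you can review the commands before anything is changed; they are not part of VirtualBox.

```shell
#!/bin/sh
# Assumed names, taken from the text: the VM and the host's network interface.
VM="UbuntuELK"
HOST_IF="enp9s0"

# Hypothetical helper: print each command, and only execute it
# when DRY_RUN=0 is set explicitly.
vbox() {
    echo "VBoxManage $*"
    if [ "${DRY_RUN:-1}" = "0" ]; then
        VBoxManage "$@"
    fi
}

vbox modifyvm "$VM" --cpus 4                                     # one CPU -> four
vbox modifyvm "$VM" --memory 4096                                # RAM in MB: 512 -> 4096
vbox modifyvm "$VM" --nic1 bridged --bridgeadapter1 "$HOST_IF"   # NAT -> bridged
```

Run it once as-is to see what would happen, then again with DRY_RUN=0 to apply the changes.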

The more I use the VirtualBox command line tools, the happier I am with VirtualBox!

I didn't install openssh as part of the installation process (even though it was an option) because I wasn't thinking about needing it, so I DID have to RDP to the system once to install and start openssh. That was done with:

sudo apt-get install ssh

sudo systemctl enable ssh

sudo systemctl start ssh

Then I verified it started successfully with:

journalctl -f --unit ssh

Dependencies and APT

As I said, you can install the entire Elastic stack -- logstash, elasticsearch and kibana -- using apt, but only elasticsearch is in the default Ubuntu repositories. To make sure everything gets installed at the current 5.x version, you have to add the Elastic repository to apt's sources.

First, though, logstash and elasticsearch require Java; most documentation I've seen uses the webupd8team PPA so that's what all of my ELK systems use. The full directions can be found here:

http://www.webupd8.org/2012/09/install-oracle-java-8-in-ubuntu-via-ppa.html

but to keep things simple, just accept that you need to add their PPA, update apt and install java8.

sudo add-apt-repository ppa:webupd8team/java

sudo apt-get update

sudo apt-get install oracle-java8-installer

Since Java 9 is currently available in pre-release, you'll get a notice when you add their repository. When you do the install you'll get a prompt to accept the Oracle licence. Each of the three commands above produces a lot of output, so don't be surprised when the screen fills up after each one.

Now for the stack! You can go through the installation documentation for each product at:

https://www.elastic.co/guide/en/logstash/current/installing-logstash.html

https://www.elastic.co/guide/en/elasticsearch/reference/current/deb.html

https://www.elastic.co/guide/en/kibana/current/deb.html

First, add the Elastic GPG key (note piping to apt-key just saves the step of saving the key and then adding it):

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Now add the Elastic repository to apt (alternatively you can create a file of your choosing in /etc/apt/sources.list.d and add from 'deb' to 'main' but I like the approach from the Elastic documentation). This is all one command:

echo "deb https://artifacts.elastic.co/packages/5.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-5.x.list

Update apt:

sudo apt-get update

Now install all three pieces at once:

sudo apt-get install logstash elasticsearch kibana

I had about 170 MB to download so it's not exactly a rapid install!

Now make sure everything starts at startup:

sudo systemctl enable logstash

sudo systemctl enable elasticsearch

sudo systemctl enable kibana

Make Sure It All Chats

By default, logstash doesn't read anything or write anywhere, elasticsearch listens to localhost traffic on port 9200, kibana tries to connect to localhost on port 9200 to read from elasticsearch and kibana is only available to a browser on localhost at port 5601. Let's address each of these separately.

elasticsearch

Since elasticsearch is the storage location used by both logstash and kibana, I want to make sure it's running first. I can start it with:

sudo systemctl start elasticsearch

Then make sure it's running with:

journalctl -f --unit elasticsearch

The last line should say, "Started Elasticsearch.".
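systemd can report "Started" a few seconds before elasticsearch is actually answering requests, so a second check against the HTTP port doesn't hurt. This is a minimal sketch, assuming curl is installed (it may not be on a fresh Ubuntu install) and that elasticsearch is on its default localhost:9200; the es_ready name is just something I made up for the example.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given URL answers within two seconds.
es_ready() {
    curl -s -o /dev/null --max-time 2 "$1"
}

if es_ready "http://localhost:9200"; then
    echo "elasticsearch is answering on port 9200"
else
    echo "no answer yet; give it a few seconds and check the journal again"
fi
```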

kibana

Kibana can read from elasticsearch, even if nothing is there. Since I need to be able to access kibana from another system, I want kibana to listen on the system's IP address. This is configurable (along with a lot of other things) in "/etc/kibana/kibana.yml" using the "server.host" option. I just add a line at the bottom of the file with the IP of the system (in this case, 192.168.1.107):
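The line itself is a single key/value pair. Here is a minimal sketch of appending it from the shell, shown against a scratch file so nothing on the system changes; the set_kibana_host function is my own helper, and the IP is the one from this example, so substitute your own.

```shell
#!/bin/sh
# Hypothetical helper: append a server.host line to a Kibana config file.
# Usage: set_kibana_host <config-file> <ip>
set_kibana_host() {
    printf 'server.host: "%s"\n' "$2" >> "$1"
}

# Demonstrated on a scratch file rather than the real config.
conf=$(mktemp)
set_kibana_host "$conf" "192.168.1.107"
cat "$conf"

# For the real file, something like this does the same job:
#   echo 'server.host: "192.168.1.107"' | sudo tee -a /etc/kibana/kibana.yml
```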

Then start it with:

sudo systemctl start kibana

You can check on the status with journalctl:

journalctl -f --unit kibana

I decided to point my browser at that host and see if kibana was listening as intended:

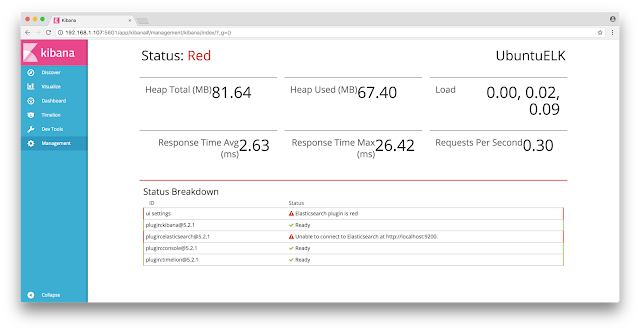

So it is! This page also indicates elasticsearch can be reached by kibana, otherwise I'd see something like this (again, this is BAD and means elasticsearch can't be reached!):

logstash

For testing, I want an easy-to-troubleshoot logstash configuration. The simplest I can think of is to read in /var/log/auth.log and push that to elasticsearch. To do that, I added a file called "basic.conf" to /etc/logstash/conf.d/ (you can name it anything you want, the default configuration reads any file put in that directory). In that file I have:

input { file { path => "/var/log/auth.log" } }

filter { }

output { elasticsearch { hosts => ["localhost:9200"] } }

This says to read the file "/var/log/auth.log", the default location for storing logins/logouts, and push it to the elasticsearch instance running on the local system.

On Ubuntu, /var/log/auth.log is owned by root and readable by the 'adm' group, but logstash can't read the file because it runs as the logstash user. To remedy this I add the logstash user to the adm group by editing /etc/group (you can also use usermod).
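If you'd rather not hand-edit /etc/group, the usermod route looks roughly like this. It's a minimal sketch: the in_group function is my own helper, and the script only tells you what to run rather than changing anything itself.

```shell
#!/bin/sh
# Hypothetical helper: succeed if user $1 is a member of group $2.
in_group() {
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx "$2"
}

if in_group logstash adm; then
    echo "logstash is already in the adm group"
else
    echo "run: sudo usermod -a -G adm logstash"
fi
```

The -a matters: without it, usermod -G replaces the user's supplementary groups instead of adding to them. Group membership is read when a process starts, so if logstash is already running it needs a restart to pick up the change.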

With the config and group change in place, I started logstash:

sudo systemctl start logstash

Then I checked kibana again:

Notice the bottom line changed from 'Unable to fetch mapping' to 'Create'! That means:

- logstash could read /var/log/auth.log

- logstash created the default index of logstash-<date> in elasticsearch

- kibana can read the logstash-<date> index from elasticsearch

Now I know everything can chat to each other! If you click "Create", you can see the metadata for the index that was created (and you have to do this if you want to query that index):

The First Query

Now that I've gone through all of this effort, it would be nice to see what ELK made of my logfile. By default the logstash file input only reads lines added to the file after logstash starts, so I need to make sure it gets something new. In my terminal window I typed "sudo dmesg" so that sudo would log the command.

To do a search, click "Discover" on the left pane in kibana. That gives you the latest 500 entries in the index. I got this:

Notice this gives a time vs. occurrence graph that shows how many items were added to the index in the last 15 minutes (the default time range). I'm going to cheat just a little bit: I know sudo commands are logged with the word "command", so I search for both words by replacing '*' with 'sudo AND command' in the search bar (the 'AND' is important).
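If you want to double-check what kibana is showing, the same query string can be sent straight to elasticsearch with the URI search API. This is a minimal sketch, assuming the default logstash-* index names and elasticsearch on localhost:9200; the search_url function is my own helper and only encodes spaces, which is enough for this query but not for arbitrary input.

```shell
#!/bin/sh
# Hypothetical helper: build a URI search against the logstash-* indices.
# Usage: search_url <host:port> <query string>
search_url() {
    query=$(printf '%s' "$2" | sed 's/ /%20/g')
    printf 'http://%s/logstash-*/_search?q=%s&pretty\n' "$1" "$query"
}

url=$(search_url "localhost:9200" "sudo AND command")
echo "$url"

# On the ELK host, fetch the results with:
#   curl -s "$url"
```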

The top result is my 'dmesg'; let's click the arrow beside that entry and take a better look at it:

The actual log entry is in the 'message' field and indeed the user 'demo' did execute 'sudo dmesg'! One of my favourite things about this entry is the link between the entry and the Table version -- this link can be shared with others so if you search for something specific and want to send someone else directly to the result, you can just send them that link!

Wrapping It Up

This is a REALLY basic example of getting the entire ELK stack running on one system to read one log file. Yes, it seems like a lot of work, especially just to read ONE file, but remember - this is a starting point. I could have given logstash an expression, like '/var/log/*.log', and had it read everything - then query everything with one search.

With a little more effort I can teach logstash how to read the auth log so that I can do interesting things like searching for all of the unique sudo commands by the demo user. With a little MORE effort after that, I can have a graph that shows the number of times each command was issued by the demo user. The tools are all there; I just have to start tweaking them.

I do get it, though: it can seem like a lot of work the first few times to make everything work together. A friend of mine, Phil Hagen, is the author of a SANS class on network forensics and he uses ELK *heavily* - so heavily that he has a virtual machine with a customised ELK installation that he uses in class. He's kind enough to provide that VM to the world!

He has lots of documentation there and full instructions for how to get log data into SOF-ELK. I've seen it run happily with hundreds of thousands of lines of logs fed to it and I've heard reports of millions of lines of logs processed with it.
