This post is a brief overview of some elasticsearch concepts and some very basic configuration settings/interactions. It is not a deployment guide or user's reference.

If you're Unix- or Linux-adept, you've probably used curl or wget. If not, that's okay! All you need to know about them is that they are used on the command line on Unix-like systems to make requests of web servers. I will use curl in a couple of examples but almost anything that interacts with a web server will work: curl, wget, PowerShell, Python or Perl libraries, even a graphical web browser (like Chrome or Edge) to some degree. The key thing is that you don't need to be familiar with curl yet, so don't feel overwhelmed when you see it.

A Too-Wordy Overview

If you really want to make things, well, boring, you can describe elasticsearch and hit pretty well all of the current buzz words: cross-platform, Lucene-based, schema-free, web-scale storage engine with native replication and full-text search capabilities.

I don't like buzz word descriptions, either.

In a little less manager-y phrasing, it's for storing stuff. You can run it on several systems as a cluster. It does cool things like breaking stuff into parts and storing those parts on multiple systems, so searches can be pretty speedy and you can take systems down for maintenance without having to stop the applications that rely on it. You can send stuff to it and retrieve stuff from it using tools like wget and curl. If you need it to grow, you can do that, too, by adding servers to the cluster, and it will automatically rebalance what it has stored across the new server(s).

It can work in almost any environment. It's written in Java and you can run it on Linux, BSD, macOS and Windows Server. I use Ubuntu for all of my elasticsearch systems but I'm testing it on Windows Server 2012 (sorry, I'm doing that at work, so no blog posts on it unless Microsoft donates a license for me to use or I decide to pay for some Windows Server VMs). Want to use Amazon's S3 to store a backup of your data? Yep, it does that, too. Its snapshot and restore feature can use an S3 bucket as a repository for backups and restores without any third-party tools, although older releases need Elastic's official repository-s3 plugin installed first.

Okay, I think that's probably enough on that.

Data Breakdown

There is a LOT of terminology that goes around elasticsearch. For now, here's what you need to know:

document - this is a single object in elasticsearch, similar to a row in a spreadsheet

shard - a group of related documents

index - a group of related shards

node - a server running elasticsearch

cluster - a group of related nodes that share information

At this point elasticsearch users are probably screaming at me because I've left out a lot of nuance. Know these are generalisations for beginners. If you stick with this blog I'll get more precise over time. In this context my single VM is both a node and a cluster - it's a cluster of one node. It will have an index that holds the sample log data I want to store. That index will be split into shards that can be moved between nodes for fault tolerance and faster searching. Those shards will contain documents and each document will be a single line of log data.

A Default Node

In Beginning ELK Part One, I installed elasticsearch on Ubuntu using Elastic's apt repository. I'm not using THAT VM for this post but I've installed the entire stack using that post for consistency.

The default installation on Ubuntu is usable from the start if you want to use it for testing or non-networked projects. If you have an application that speaks elasticsearch then you can pretty well install it, tell your application to connect via localhost and be in business. For right now I'm running it with the default configuration. I booted the VM, checked to make sure there were no elasticsearch processes running via "ps", started it with systemctl (I used restart out of habit; start would have been better) and used journalctl to see if it logged any errors on startup:

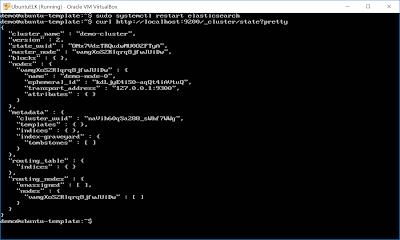
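Since the commands in that screenshot aren't copy-and-paste friendly, here is a rough text sketch of the same sequence as a shell function. The name es_start_and_check is hypothetical, and it assumes the systemd unit is called "elasticsearch" (as it is with Elastic's apt package) and that your account can use sudo.

```shell
# Hypothetical helper: check for a running Elasticsearch, start it with
# systemd, then show the most recent journal entries for the unit.
# Assumes the systemd unit is named "elasticsearch" (Elastic's apt package).
es_start_and_check() {
  # "[e]" keeps grep from matching its own command line.
  ps -ef | grep -i '[e]lasticsearch' || echo "no elasticsearch processes running"

  # "start" is enough here; "restart" also works if it was already running.
  sudo systemctl start elasticsearch

  # Print the last 20 lines logged by the unit, without opening a pager.
  sudo journalctl -u elasticsearch --no-pager -n 20
}
```

After sourcing it, running es_start_and_check prints either the matching processes or a "no elasticsearch processes running" line, followed by the recent log entries.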

Next I used curl to query the running instance and get its "health" status. ES uses a traffic light protocol for status so it's either red, yellow or green. Without getting too far into what each means, for starters just know red is bad, yellow is degraded and green is good. The general format of a curl request is "curl -X <request type> <URL>", where the request type is optional and defaults to GET. In the URL you'll see "?pretty", which tells elasticsearch to use an output that *I* find easier to read. You're welcome to try it with and without that option to see which you prefer!
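A minimal sketch of that health request follows. It assumes the node listens on localhost:9200 (override with the ES_URL variable, a name made up for these examples), and es_health and es_status are hypothetical helper names. es_status just pulls the traffic-light value out of the JSON with sed, so no extra tools are needed.

```shell
# Assumes Elasticsearch listens on the default port on this machine;
# set ES_URL beforehand to point somewhere else.
ES_URL="${ES_URL:-http://localhost:9200}"

# Ask the cluster for its health; "?pretty" formats the JSON for humans.
es_health() {
  curl -s "${ES_URL}/_cluster/health?pretty"
}

# Hypothetical helper: read a health response on stdin and print only the
# traffic-light status (green, yellow or red). Works on pretty or compact JSON.
es_status() {
  sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}

# Usage against a running node:
#   es_health              # full JSON, including cluster_name and node counts
#   es_health | es_status  # just the colour
```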

Notice the value of "cluster_name". By default, ES starts with a cluster name of "elasticsearch". This is one of the things I'll change later. If you only have one cluster then it's not a big deal but if you have multiple clusters I highly recommend changing that. I recommend changing it anyway because if you have one cluster you're probably going to eventually have more!

"number_of_nodes" indicates how many systems running ES are currently in the cluster. That's a slight abuse of the terminology since you can run multiple nodes on a large system but most people will run one ES instance on each server.

"number_of_data_nodes" indicates how many of the ES nodes are used for storing data. ES has multiple node types: some store data, some maintain the administrative tasks of the cluster and some allow you to search across multiple clusters. This number tells you how many of the nodes that store data are available in the cluster.

Once you're a little more familiar with elasticsearch, or if you want to know specific information about each node, another useful query is for the cluster "stats". It has a LOT of output, even for a quiet one-node cluster, so I'm only showing the first page that is returned (since it's chopped off, I just replaced "health" with "stats"):
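A text version of that stats request, trimmed to roughly one screen with head, might look like the sketch below. es_cluster_stats and its 40-line default are illustrative choices rather than anything built into elasticsearch, and ES_URL again defaults to localhost:9200.

```shell
# Assumes the default port on this machine; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: fetch cluster-wide statistics and keep only the
# first N lines (default 40), since the full output is long.
es_cluster_stats() {
  curl -s "${ES_URL}/_cluster/stats?pretty" | head -n "${1:-40}"
}

# Usage:
#   es_cluster_stats      # first 40 lines
#   es_cluster_stats 100  # first 100 lines
```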

Some Slight Changes

Especially for the loads I'm going to put on my single-node cluster, there are VERY FEW changes to make to the ES configuration file. One of the themes running through most of the community questions I've seen asked is that people try to toggle too many settings without understanding what they will do "at scale".

For this cluster, I'm only going to make two settings changes - I'm going to name my cluster and name my node. In my next post I'll add another node to the cluster and make a few more changes but this is sufficient for now. These changes are made in

/etc/elasticsearch/elasticsearch.yml

The /etc/elasticsearch directory is not world-readable, so tab-complete may not work and you'll need sudo (or a root shell) to edit the file.

When I edit config files I like to put my changes at the end. If this were a file managed by, e.g., Puppet, it wouldn't matter because I'd push my own file, but for these one-offs I think it's easiest to read (and update later...) when my changes are grouped at the end. To make things simple and readable, I'm going to name this cluster "demo-cluster" and this node "demo-node-0". By default it will be both master-eligible, meaning it can be elected to control/monitor cluster state (as the only node, it will be the master), and a data node, so it will store data.

cluster.name: demo-cluster

node.name: demo-node-0
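If you'd rather script the edit than open an editor, here's a sketch. The helper name set_demo_names is hypothetical; it only appends a setting when no uncommented line for it exists yet, so running it twice won't duplicate anything. Run it from a root shell because of the directory permissions, and restart elasticsearch afterwards.

```shell
# Hypothetical helper: append this post's cluster and node names to an
# elasticsearch.yml, skipping any setting that is already set (uncommented).
set_demo_names() {
  local conf="$1"
  # Make sure a non-empty file ends with a newline before appending to it.
  if [ -s "$conf" ] && [ -n "$(tail -c 1 "$conf")" ]; then
    echo >> "$conf"
  fi
  grep -q '^cluster\.name:' "$conf" || echo 'cluster.name: demo-cluster' >> "$conf"
  grep -q '^node\.name:' "$conf" || echo 'node.name: demo-node-0' >> "$conf"
}

# Usage, from a root shell:
#   set_demo_names /etc/elasticsearch/elasticsearch.yml
#   systemctl restart elasticsearch
```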

In the actual config file, this looks like:

Now when I restart elasticsearch and ask for cluster information, I get much more useful output:

If you take a close look, you'll see the cluster_name has been set to "demo-cluster" and, under "nodes", the name for my one node is set to "demo-node-0".
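If you don't want to wade through the full cluster output to confirm the names, two smaller requests also work. es_whoami and es_nodes are hypothetical helper names and ES_URL is assumed to default to localhost:9200. The root endpoint reports the node's "name" and "cluster_name", and _cat/nodes prints a short plain-text table.

```shell
# Assumes the default port on this machine; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# The root endpoint reports this node's "name" and its "cluster_name".
es_whoami() {
  curl -s "${ES_URL}/?pretty"
}

# _cat/nodes prints one line per node; "v" adds a header row and "h"
# picks which columns to show.
es_nodes() {
  curl -s "${ES_URL}/_cat/nodes?v&h=name,master,node.role"
}
```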

My First Index and Document

Right now my ES node doesn't have an index yet (at least, none apart from any internal ones used for node/cluster control). You can create an index on the fly, with no predefined structure, just by writing a document to it. For example, if I want to create an index called "demo_index" and insert a document with a field called "message_text" whose value is "a bit of text", I can do both at once by using curl to POST a JSON object to elasticsearch. If you know what you want your index to look like, instead of leaving it "free form" you can create it ahead of time with specific settings and mappings. That is a better practice than what I'm doing here.
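For comparison, creating an index ahead of time is a PUT with the settings in the request body. This is a sketch only: es_create_index is a hypothetical helper, demo_index_explicit is just an example name (so it doesn't collide with demo_index), and one shard with zero replicas is an illustrative choice for a single-node test box, not a recommendation. Mappings can go in the same body, but their exact shape differs between versions with and without mapping types, so they're left out here.

```shell
# Assumes the default port on this machine; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: create index $1 with explicit settings instead of
# letting the first write create it with the defaults.
es_create_index() {
  curl -s -X PUT "${ES_URL}/$1?pretty" \
    -H 'Content-Type: application/json' \
    -d '{"settings":{"number_of_shards":1,"number_of_replicas":0}}'
}

# Usage (the index name is only an example):
#   es_create_index demo_index_explicit
```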

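In the below example, "-XPOST" means to do an HTTP POST, "9200" is the port elasticsearch runs on by default, "-H" sets the Content-Type header to JSON (Elasticsearch 6.0 and later reject request bodies sent without it) and the stuff after "-d" is the data I want to write. Note that the data must be a valid JSON object and that I've surrounded it with single quotes. I'm using "demo_index/free_text", meaning this document has a type of "free_text" (mapping types were deprecated in Elasticsearch 7.x and removed in 8.x, where the equivalent path is "demo_index/_doc"), and I'm specifying "?pretty" so that the results are formatted for reading:

curl -XPOST 'http://localhost:9200/demo_index/free_text?pretty' -H 'Content-Type: application/json' -d '{"message_text":"a bit of text"}'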

When I run it on my node, I get the following:

First of all, yes, the output is kind of ugly - that's because elasticsearch returns a JSON object. If you inspect it, the important items are:

_index: demo_index

result: created

This means the document was stored successfully and, because demo_index didn't exist yet, the index was created along with it!

A Simple Search

To query elasticsearch and see what it thinks the "demo_index" should look like, I just need to change the curl command a wee bit. I removed the "-X" argument, since curl's default action is a GET and specifying -XGET is extraneous, and I dropped the header and data because I'm not sending anything. I've kept the ?pretty option so it's easier to read:

curl 'http://localhost:9200/demo_index?pretty'

Elasticsearch returns the following:

The first segment describes "demo_index". There is one mapping associated with the index and it has a property called "message_text" that is of type "text". Mappings let you define the type of each property so, for example, you can specify that a property should always be an integer or text. Once a field has been mapped, its type generally can't be changed without reindexing, which is one more reason to create indexes ahead of time.

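The second segment lets me know that "demo_index" is an index, that it has five primary shards (so the data is broken into five pieces), that each shard should have one replica (number_of_replicas: 1, so every piece would be stored twice), the Unix-style timestamp, in milliseconds, of when it was created and that its name is "demo_index". On a one-node cluster the replicas can't actually be placed, because elasticsearch never puts a replica on the same node as its primary shard, which is why a single node holding this index reports a "yellow" status. The number of replicas can be changed on the fly, but the number of shards is fixed when an index is created (changing it means reindexing or using the shrink/split APIs). Both can also be preset in an "index template", but that's a bit more advanced than we need to go right now!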
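Two small follow-ups to that paragraph, sketched as hypothetical helpers (es_set_replicas and epoch_ms_to_date, with ES_URL defaulting to localhost:9200). The first changes number_of_replicas on a live index, which is allowed because it's a dynamic setting; setting it to 0 on a one-node cluster removes the replica copies that have nowhere to go. The second turns creation_date, which is in milliseconds, into a readable UTC date. It relies on GNU date's -d option, as found on Ubuntu; macOS and BSD date use different flags.

```shell
# Assumes the default port on this machine; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: set number_of_replicas ($2) on an existing index ($1).
es_set_replicas() {
  curl -s -X PUT "${ES_URL}/$1/_settings?pretty" \
    -H 'Content-Type: application/json' \
    -d "{\"index\":{\"number_of_replicas\":$2}}"
}

# Hypothetical helper: creation_date is milliseconds since the Unix epoch,
# while GNU date expects seconds, so divide by 1000 first.
epoch_ms_to_date() {
  date -u -d "@$(( $1 / 1000 ))" '+%Y-%m-%dT%H:%M:%SZ'
}

# Usage:
#   es_set_replicas demo_index 0
#   epoch_ms_to_date 1514764800000   # an example value, not from this post
```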

If I want to query elasticsearch for my data, I can have it search for everything (if you're used to SQL databases, it's like doing a "select * from demo_index"):

curl 'http://localhost:9200/demo_index/_search?pretty'

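This returns every document in the index (by default a search returns at most the first 10 hits, which is everything here since there's only one document):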

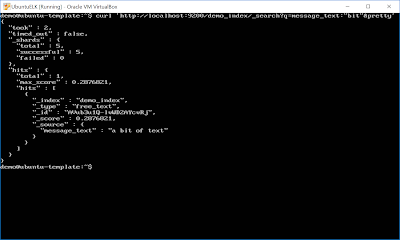
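A search only returns the first 10 hits unless you ask for more with the size parameter, and if you only want to know how many documents there are, the _count API is lighter. Both are sketched below as hypothetical helpers; ES_URL is assumed to default to localhost:9200, and very large sizes are capped by the index's max_result_window setting (10,000 by default).

```shell
# Assumes the default port on this machine; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: return up to $2 hits (default 10) from index $1.
es_search_all() {
  curl -s "${ES_URL}/$1/_search?size=${2:-10}&pretty"
}

# Hypothetical helper: return just the number of documents in index $1.
es_count() {
  curl -s "${ES_URL}/$1/_count?pretty"
}

# Usage:
#   es_search_all demo_index 50
#   es_count demo_index
```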

I may not want to have ES dump EVERYTHING, though. If I know I have a property called "message_text" and I want to search it for the word "bit", I can build on the previous command by adding a query value (the "q" parameter):

curl 'http://localhost:9200/demo_index/_search?q=message_text:"bit"&pretty'

When this runs, I get:

This lets me know it was able to successfully query all five shards and that one document matched the word "bit". It then returned that document.
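The same search can also be written as a JSON request body with a "match" query instead of the "q" URL parameter, which is the form most of the Elastic documentation uses. In this sketch es_match is a hypothetical helper, ES_URL defaults to localhost:9200, and the search text is assumed not to contain double quotes (they aren't escaped).

```shell
# Assumes the default port on this machine; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: run a "match" query for text $3 in field $2 of index $1.
# The text is inserted into the JSON as-is, so it must not contain quotes.
es_match() {
  curl -s -X GET "${ES_URL}/$1/_search?pretty" \
    -H 'Content-Type: application/json' \
    -d "{\"query\":{\"match\":{\"$2\":\"$3\"}}}"
}

# Usage:
#   es_match demo_index message_text bit
```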

Now Delete It

You can delete individual documents, either by ID or with the _delete_by_query API, but that's for another post because it gets into some JSON handling that is beyond simple interaction. For now, since I'm dealing with a test index, I just want to delete the entire index. I can do that with:

curl -XDELETE 'http://localhost:9200/demo_index?pretty'

When I run the above, I get the following:

It can take up to several minutes for the command to be replicated to all of the data nodes in a large cluster. As before, I can check the index status with curl:
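One way to check without reading any JSON is a HEAD request, which returns only an HTTP status: 200 if the index exists and 404 if it doesn't. This is a sketch with a hypothetical helper, es_index_exists, and the usual localhost:9200 default for ES_URL.

```shell
# Assumes the default port on this machine; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: succeed (exit 0) only if index $1 exists.
# -I sends a HEAD request; -o /dev/null discards the headers and
# -w prints just the HTTP status code.
es_index_exists() {
  code="$(curl -s -o /dev/null -w '%{http_code}' -I "${ES_URL}/$1")"
  [ "$code" = "200" ]
}

# Usage:
#   es_index_exists demo_index && echo "still there" || echo "gone"
```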

For those who may be curious, yes, you can list all of the indexes in your cluster:
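A minimal sketch using the _cat/indices API, which prints a plain-text table with one line per index (health, status, name, document count, size and more); "v" adds column headers. es_list_indices is a hypothetical name and ES_URL defaults to localhost:9200.

```shell
# Assumes the default port on this machine; set ES_URL to override.
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: list every index in the cluster with a header row.
es_list_indices() {
  curl -s "${ES_URL}/_cat/indices?v"
}
```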

Wrapping Up

I know, I've covered a LOT in this post! There's some information on elasticsearch in general and how to create, query and delete indexes. There is curl, which may be new to some readers. It's a lot to take in and I've just scratched the surface of what you can do with elasticsearch using only curl. The Elastic documentation is fantastic and I highly recommend at least perusing it if you think ES may be useful in your environment:

https://www.elastic.co/guide/en/elasticsearch/reference/current/index.html
